Why WebAssembly Is a Viable Choice for Cloud Native Apps.

Should containers start packing?

You can think of cloud native applications as applications that are "native to the cloud": built for the cloud, aware of it, and therefore designed to take full advantage of modern cloud computing platforms.

To ensure an application takes full advantage of the available compute resources, we must also ensure that it's not hindered by other application-level routines with which it may be sharing resources. To achieve this, we usually break our applications into different modules and have them run independently with pseudo-dedicated resources. And since each module might have its own runtime dependencies, we bundle these together into something called a container, a piece of software that packs together our module and its runtime requirements. These kinds of modules are now what we call microservices.

It used to be the case that you would have to purchase physical space on a remote server and run a virtual machine (VM) on it. If any part of your app needed to scale (i.e., needed more resources), you bought more space, spun up a new VM on it, and so on. Virtual machines are not small: they can be quite large and they are heavy on memory, and you pay for all of that.

With the microservices model, you only need to spin up a new container instance in the same VM, and it (with the code it runs) can scale independently as needed. That's the dream. Except, there is a problem.

Since applications are now deployed as services, a service can stay idle for quite a long time. Imagine an ecommerce website that has a little account recovery module for resetting customers' accounts. If this is deployed as a microservice and no one needs to recover their account for, say, 5 months, then it sits idle while adding to your bill. At the same time, we can't turn the service off: what if someone suddenly needs to reset their account while we are asleep?

So service providers came up with a trick to solve this problem: break some parts of your application into functions and have those functions live in the containers we talked about. When the functionality a function provides is needed, its environment is quickly booted up to serve the request, after which it goes back to sleep. These functions are referred to as serverless functions because you don't buy VM space anymore, you don't deploy containers anymore, and you essentially don't manage servers. You only manage functions, and everything else is handled by the provider.

This approach is cheap, as you only pay for the resources your function consumes before it returns to sleep. The problem now is that this booting-up process, called a cold start, is not merciful on time. It involves three steps: starting the container, which is necessary to make sure your application stays isolated; starting the runtime environment inside that container; and finally loading the function. In practice this takes seconds, and seconds is a LOT of time in 202X!

There are at least two causes of this problem. The first is that containers aren't exactly lightweight. They are lighter than VMs and are very suitable for the original microservice approach, but if you need to wake up a 200MB container just to run a single function (literally), perhaps you would be better off doing the dishes. The second is that the runtime environments aren't exactly lightweight either: Ruby, JavaScript (Node) and Python, which are the popular languages for deploying functions, all run in relatively heavy (even if JIT-ed) environments.
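To get a rough feel for the runtime part of a cold start, the sketch below times how long it takes to launch a fresh Python interpreter that runs an empty program. It only measures process and interpreter start-up on your own machine, not container start-up or any provider's real cold start, and the helper name `time_runtime_startup` is made up for this illustration.

```python
import subprocess
import sys
import time


def time_runtime_startup(runs: int = 3) -> float:
    """Return the average wall-clock seconds to start and exit a bare interpreter."""
    total = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        # "-c pass" runs an empty program, so nearly all the time is start-up cost.
        subprocess.run([sys.executable, "-c", "pass"], check=True)
        total += time.perf_counter() - start
    return total / runs


if __name__ == "__main__":
    print(f"average start-up: {time_runtime_startup() * 1000:.1f} ms")
```

Whatever number you see is paid before a single line of your function runs, and a real cold start adds container start-up and dependency loading on top of it.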

So where does WebAssembly fit in here?

The WebAssembly (Wasm) platform is the environment for running a specific binary format. The "platform" is usually a virtual machine that reads this binary format and converts it to native machine code, the native 1s and 0s that make languages like C run very fast. This makes WebAssembly applications run at near-native speed.
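To make "a specific binary format" a little more concrete: every Wasm binary module starts with the four magic bytes `\0asm` followed by a 4-byte little-endian version number, currently 1. The sketch below checks only that header. The function name `is_wasm_module` is my own, and this is not a validator for the rest of the module.

```python
import struct

WASM_MAGIC = b"\x00asm"  # first four bytes of every Wasm binary module
WASM_VERSION = 1         # binary format version, stored as a little-endian u32


def is_wasm_module(data: bytes) -> bool:
    """Check whether data begins with the Wasm magic number and version 1."""
    if len(data) < 8:
        return False
    magic = data[:4]
    (version,) = struct.unpack("<I", data[4:8])
    return magic == WASM_MAGIC and version == WASM_VERSION


# The smallest valid module: just the 8-byte header and no sections.
EMPTY_MODULE = b"\x00asm\x01\x00\x00\x00"

if __name__ == "__main__":
    print(is_wasm_module(EMPTY_MODULE))                # True
    print(is_wasm_module(b"\x7fELF\x02\x01\x01\x00"))  # False
```

A runtime performs this check (and much more validation) before it compiles anything to native code.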

There are very attractive benefits to this:

The WebAssembly binary format is very compact. That is, what eventually gets to run in the Wasm VM is a lightweight, single binary file. This is because the WebAssembly specification generally frowns on unoptimised binaries, which drives many makers of to-Wasm compilers to ensure that the resulting binaries are optimised for both space and time.

The WebAssembly runtime itself is light. Wasm VMs are generally small, roughly 10 to 40 MB, and they start up quickly, execute cheaply at near-native performance and shut down quickly. So when you start up the Wasm runtime, you can be sure you are not waking up a 500MB+ beast from sleep. This also makes these runtimes suitable for edge devices like the ones used in IoT.

Wasm runs the code at near-native speed. That's the whole point of its existence. Read my introduction to Wasm.

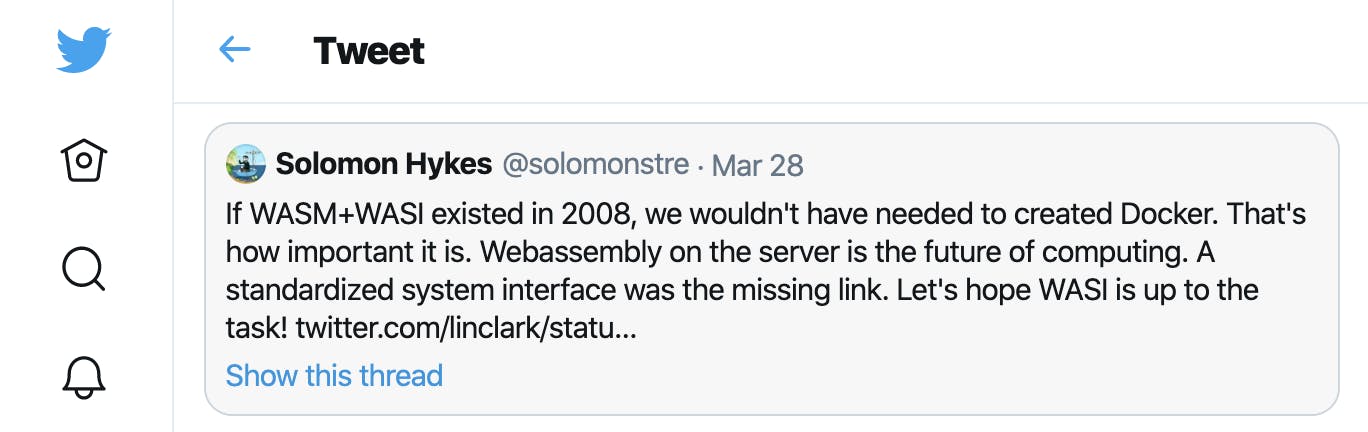

Security: Whatever runs in Wasm only talks to the Wasm runtime and not directly to the machine. This can prevent malicious code from getting access to the machine, which is a very attractive benefit for FaaS providers. This might even eliminate the need for containers completely.

Portability: This is the most attractive to me as a developer. Your Wasm binaries can work on any compliant runtime, so you can migrate between machines, platforms and providers without any fuss.

Interoperability: A unique advantage. Many programming languages can now compile to Wasm binaries, thereby benefiting from the performance boost while still enjoying one another's features. You can essentially combine the joy of writing in your preferred language with the performance boost Wasm provides.

All of these result in lightweight, secure, portable applications that are also merciful on the bills. I hear that the folks at Fermyon spend only a fraction of what their cloud costs would otherwise be by using Spin (the Wasm framework for web services).

How to begin?

Many companies are already using WebAssembly to run things like serverless functions in the cloud.

To get started, begin by picking a language that compiles well to Wasm. The well-supported languages include C/C++, Rust, Go, Grain and AssemblyScript, among others. Then pick a WebAssembly runtime that is built for your purpose.

So if you want to run it efficiently on IoT nodes and other edge devices, WasmEdge is great for that; if you wish to run it in the cloud for your backend API, the Wagi-powered Spin framework is doing a great job; and if you don't want to manage Wasm runtimes, system integrations and so on, you could try WasmCloud's managed solution. You might even want to start a new blockchain network where smart contracts have to run safely, and Wasm would work for that too.

There is so much work being done to make Wasm hosts easy to adopt for on-premises, hybrid and public cloud use cases. Tools are being contributed by folks at Microsoft, Google, Shopify and many others.

Wasm is doubtlessly great for the performance-critical cloud, and there is great potential still to be unlocked.